Project Target

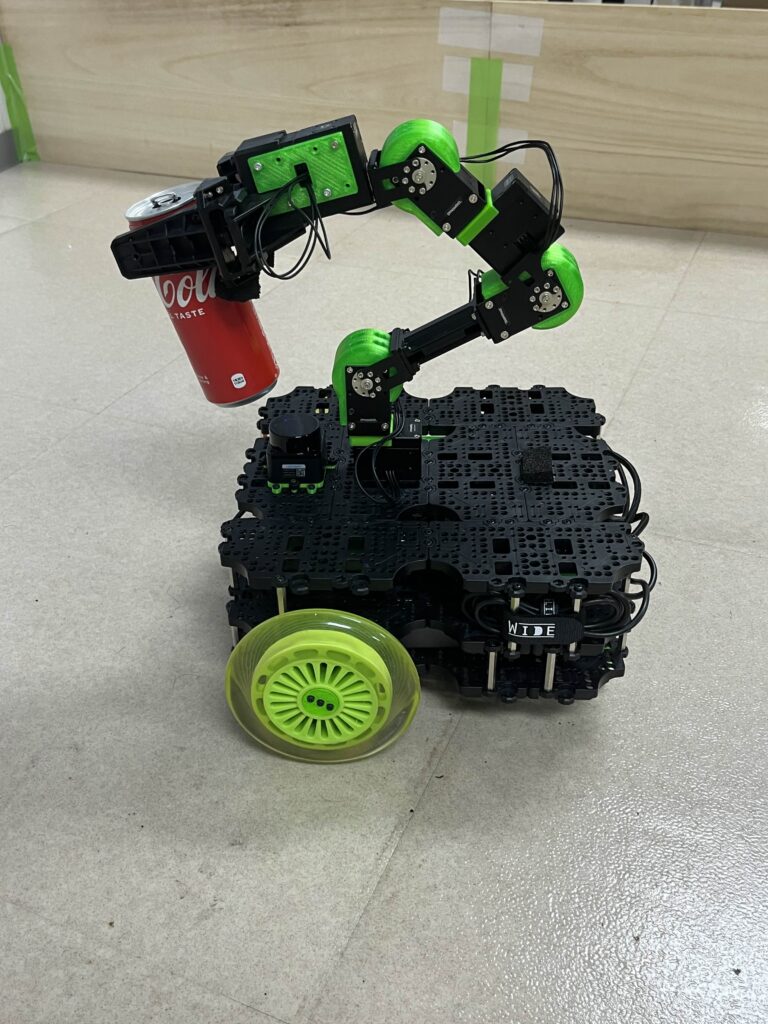

The TurtleBot3 Lime is a next-generation TurtleBot based on the TurtleBot3 Waffle, with the addition of an NVIDIA Jetson Orin Nano, one of the most representative edge GPUs, and a six-degree-of-freedom arm. AI robots are beginning to be used in a variety of industrial settings, and ROS is expected to become indispensable as the bridge between AI algorithms and robot hardware. Lime is intended as a training environment for people who develop and operate AI robots using ROS, and as an environment for developing new related technologies.

Most modern AI mechanisms are implemented with deep learning techniques. In robot implementations, the most frequently used ones are:

- Cognitive mechanisms, especially image recognition

Image processing using deep learning provides essential functions for object recognition, posture recognition, etc.

- Motion control, planning

There have been many attempts to realize navigation and manipulator motion planning, as well as integrated motion planning that combines both, using deep reinforcement learning.

- Task planning and group control

Task planning is a planning hierarchy that is more abstract than motion planning. Its main purpose is to generate the robot’s action procedures necessary to achieve a goal. As its extension, a mechanism for planning not only the actions of a single robot but also the actions of multiple robots in a collective manner to improve the total operational efficiency of robots is called a fleet control mechanism.

Recently, methods using world models and reinforcement learning have been attempted for various robot planning. In addition, attempts have been made to use LLMs for symbol-based higher-level task planning.

- Interpersonal communication

By using LLMs, it is possible to communicate with robots in natural language and interpret commands.

It is expected that AI technologies will be used in almost all functional hierarchies of robot control mechanisms. Therefore, it is extremely important to be able to utilize them and create new robots by using them.

To achieve this, it is necessary to consider not only individual algorithms, but also the architecture used to configure the system and the development method. The robot system architecture depends on the properties of deep learning as a system component, so earlier architectures designed around non-deep-learning methods need to be reconsidered accordingly.

Many robot control algorithms are now available, for example from NVIDIA Isaac and other open source repositories. The main challenge is therefore to test and examine, on actual robots, the architecture and development process needed to use them effectively. With this goal in mind, Momoi.org is proceeding with the project in stages.

Discovering ways to use AI technology

Deep learning technology has recently made remarkable progress, and algorithms that can be used for robot control are being released one after another at short intervals. To make effective use of them, it is necessary to define a specific field of use and implement applications in that field, so that the applicability of each technology and the user value it creates can be evaluated. In the course of this process, it may be necessary to improve the algorithms themselves. For these reasons, Momoi.org is developing experimental applications for several robots, including Lime.

Architecture considerations

Implementing all of the deep learning control algorithms on the robot is inefficient in terms of resource consumption, so functions must be divided between the robot and the server. Image recognition and motion planning are particularly well suited to local execution. Image processing in particular generates a large amount of data that is prone to problems when transmitted over a network, so it is desirable to process it locally on the robot where possible.

For this reason, a system built with Lime needs to execute image recognition and motion planning locally and connect seamlessly to the other functions executed on the server. To combine a large number of element functions appropriately into the functions an application requires, it is important to build a general-purpose architecture for versatile AI robot applications on a distributed system consisting of Lime and a server.
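As a rough illustration of the division of functions described above, the following Python sketch assigns each function to the robot or the server with a simple rule. The function names, data rates, uplink capacity, and the rule itself are all assumptions made for illustration; they are not taken from the Lime project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Function:
    """A control function with illustrative (assumed) resource figures."""
    name: str
    input_bytes_per_sec: int  # volume of input data the function consumes
    latency_sensitive: bool   # True if it sits inside the real-time loop


def place(fn: Function, uplink_bytes_per_sec: int) -> str:
    """Keep a function on the robot if it is latency sensitive or if its
    input stream would not fit through the network uplink."""
    if fn.latency_sensitive or fn.input_bytes_per_sec > uplink_bytes_per_sec:
        return "robot"
    return "server"


# Hypothetical workload: 30 fps of 640x480 RGB frames for image recognition.
FUNCTIONS = [
    Function("image_recognition", 30 * 640 * 480 * 3, True),
    Function("motion_planning", 50_000, True),
    Function("task_planning", 2_000, False),
    Function("llm_dialogue", 1_000, False),
]


def placement(functions, uplink_bytes_per_sec=1_000_000):
    """Map every function name to 'robot' or 'server'."""
    return {f.name: place(f, uplink_bytes_per_sec) for f in functions}


if __name__ == "__main__":
    for name, where in placement(FUNCTIONS).items():
        print(f"{name}: {where}")
```

With these assumed figures, image recognition and motion planning stay on the robot, while task planning and LLM-based dialogue run on the server. A real system would base the decision on measured bandwidth, latency, and GPU load rather than fixed constants.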

A robot is a real-time system: it processes external information from sensors, recognizes the environment in which it is placed, and plans its actions based on that recognition. The architecture must have a structure suited to realizing this cycle.
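The sense, recognize, and plan cycle can be sketched as a fixed-period loop. This is a minimal sketch in plain Python, not the Lime or ROS implementation; `run_cycle` and the four callables passed to it are hypothetical names introduced for illustration.

```python
import time


def run_cycle(sense, recognize, plan, act, period_s, cycles):
    """Run a sense -> recognize -> plan -> act loop at a fixed period.

    Returns the number of cycles that overran the period.
    """
    overruns = 0
    next_deadline = time.monotonic()
    for _ in range(cycles):
        observation = sense()           # read sensors
        state = recognize(observation)  # interpret the environment
        command = plan(state)           # decide what to do next
        act(command)                    # send the command to the actuators

        next_deadline += period_s
        remaining = next_deadline - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)       # wait for the next period
        else:
            overruns += 1               # the cycle took too long
            next_deadline = time.monotonic()
    return overruns


if __name__ == "__main__":
    commands = []
    missed = run_cycle(
        sense=lambda: 0.3,  # pretend range reading in meters
        recognize=lambda d: {"obstacle": d < 0.5},
        plan=lambda s: "stop" if s["obstacle"] else "forward",
        act=commands.append,
        period_s=0.01,
        cycles=5,
    )
    print(commands, "overruns:", missed)
```

Counting overruns makes it visible when recognition or planning is too slow for the chosen period, which is one of the signals that a function may need to be moved or simplified.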

Consideration of the development process

In general, deep learning requires a large amount of training data to function. To reduce the cost of preparing this data, generating training data in a digital twin environment is attracting attention. Momoi.org is developing an environment based on NVIDIA Omniverse that allows robot AI applications to be developed without using actual machines.

Project repositories

Multiple projects are underway, each with its own repository on GitHub. The current repositories are:

- pytwb library: A library with ROS project management and Python XML Behavior Tree description functions.

- ros_actor library: A library that facilitates real-time programming by automatically allocating threads, since real-time programs that rely heavily on callbacks are difficult to both write and read.

- LimeSimulDemo: A collection of the simplest Lime demo programs. Using Behavior Trees, it implements tasks such as picking up Coke cans.
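To give a sense of how a Behavior Tree can express a can-picking task, here is a minimal sketch in plain Python. It does not use pytwb or the LimeSimulDemo code; the `Status`, `Action`, and `Sequence` classes, the blackboard keys, and the three step functions are all hypothetical names introduced for illustration.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Action:
    """Leaf node that wraps a function taking the shared blackboard."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn

    def tick(self, blackboard):
        return self.fn(blackboard)


class Sequence:
    """Ticks children in order and stops at the first one that does not
    succeed. This simple version restarts from the first child each tick."""

    def __init__(self, name, children):
        self.name = name
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


def find_can(bb):
    # Assumed: a detector has already stored candidate positions.
    if not bb.get("detections"):
        return Status.FAILURE
    bb["can_pose"] = bb["detections"][0]
    return Status.SUCCESS


def approach(bb):
    # Stand-in for navigation: just record where the base ended up.
    bb["base_at"] = bb["can_pose"]
    return Status.SUCCESS


def grasp(bb):
    # Stand-in for the arm controller.
    bb["holding"] = True
    return Status.SUCCESS


pick_can = Sequence("pick_can", [
    Action("find_can", find_can),
    Action("approach", approach),
    Action("grasp", grasp),
])

if __name__ == "__main__":
    blackboard = {"detections": [(1.0, 0.2)]}
    print(pick_can.tick(blackboard), blackboard)
```

Because each step is a separate node, a failed detection stops the sequence before the arm moves, and individual steps can be replaced or reordered without touching the others.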

Following this, we plan to release examples of applications using Isaac, virtual factory definitions on Omniverse, etc.